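Some platforms, such as groq and openrouter, offer free models that usually come with a limited context window. So could we build a relay that estimates the token count before the actual model call, serves requests whose messages are short from these free models, and sends requests with a large token count through the normal call?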

As it happens, I recently came across just such a project.

Here is the code:

    import { Plugin } from 'llm-hooks-sdk';
    import { estimateTokenCount } from 'tokenx';

    // Safety margin: the token count is only an estimate, so inflate it by 10%.
    const TOKEN_ESTIMATE_ERROR_RATE = 1.1;

    export default {
      beforeUpstreamRequest({ data, logger, metadata }) {
        const model = data.requestParams.model;

        // Only models with a threshold configured in metadata are routed.
        if (!metadata[model]) {
          return null;
        }

        // Number.parseInt returns NaN instead of throwing, so check it explicitly.
        const modelContextThreshold = Number.parseInt(metadata[model] as string, 10);
        if (Number.isNaN(modelContextThreshold)) {
          return null;
        }

        // Only messages whose content is a plain string are counted.
        const messages = data.requestParams.messages;
        const messageContents = messages
          .filter(message => message.content && typeof message.content === 'string')
          .map(message => message.content as string)
          .join('');

        const totalTokens = estimateTokenCount(messageContents);
        logger.info(`Total tokens: ${totalTokens}`);

        // Short enough for the free model: rewrite the model name.
        if (totalTokens * TOKEN_ESTIMATE_ERROR_RATE < modelContextThreshold) {
          const freeModelId = model + ':free';
          logger.info(`Routed to free model: ${freeModelId}`);
          return {
            requestParams: {
              ...data.requestParams,
              model: freeModelId,
            },
          };
        }

        // Otherwise leave the request untouched.
        return null;
      },
    } satisfies Plugin;
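
One thing to keep in mind: the plugin only counts messages whose content is a plain string, so a message whose content is an array of parts would contribute nothing to the estimate. Below is a minimal sketch of how the text could be collected from both forms. The `ChatMessage` and `ContentPart` types and the `collectText` helper are hypothetical names made up for this example (loosely modelled on OpenAI-style chat messages), not part of llm-hooks-sdk.

```typescript
// Hypothetical message shapes, loosely modelled on OpenAI-style chat messages.
type ContentPart = { type: string; text?: string };
type ChatMessage = { role: string; content?: string | ContentPart[] | null };

// Collect all text in a conversation, including text parts inside array content.
function collectText(messages: ChatMessage[]): string {
  return messages
    .map((message) => {
      const { content } = message;
      if (typeof content === 'string') return content;
      if (Array.isArray(content)) {
        return content
          .filter((part) => part.type === 'text' && typeof part.text === 'string')
          .map((part) => part.text as string)
          .join('');
      }
      return ''; // null or missing content contributes nothing
    })
    .join('');
}

const sample: ChatMessage[] = [
  { role: 'system', content: 'You are helpful.' },
  {
    role: 'user',
    content: [{ type: 'text', text: 'Describe this image.' }, { type: 'image_url' }],
  },
  { role: 'assistant', content: null },
];

console.log(collectText(sample)); // "You are helpful.Describe this image."
```

The resulting string could then be passed to the token estimator in place of the string-only concatenation. Non-text parts such as images are still not counted here, so the estimate remains a lower bound for multimodal requests.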

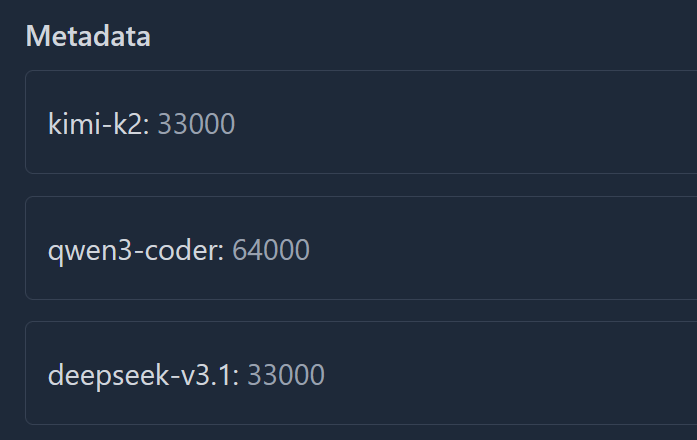

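After adding the plugin script to LLM-Hooks and configuring metadata as shown, the plugin automatically appends the :free suffix to the model name whenever it detects that the context is below the threshold, which switches the request over to the free short-context model.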
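
To make the routing rule concrete, here is a small standalone sketch of the same decision as a pure function. It assumes, as the plugin code does, that metadata maps a model name to its threshold as a string. The `pickModel` helper and the threshold value `32000` are assumptions for illustration only, not the value from the actual configuration.

```typescript
// Same safety margin as in the plugin.
const TOKEN_ESTIMATE_ERROR_RATE = 1.1;

// Hypothetical helper: returns the model name the request would end up using.
function pickModel(
  model: string,
  totalTokens: number,
  metadata: Record<string, string>,
): string {
  const threshold = Number.parseInt(metadata[model] ?? '', 10);
  // No threshold (or an unparsable one) configured: leave the model unchanged.
  if (Number.isNaN(threshold)) return model;
  return totalTokens * TOKEN_ESTIMATE_ERROR_RATE < threshold ? `${model}:free` : model;
}

// Assumed example configuration; the threshold is made up for this sketch.
const exampleMetadata: Record<string, string> = { 'deepseek-v3.1': '32000' };

// 18 * 1.1 = 19.8, well below 32000, so the free model is used.
console.log(pickModel('deepseek-v3.1', 18, exampleMetadata)); // deepseek-v3.1:free

// 34103 * 1.1 = 37513.3, above 32000, so the request goes through unchanged.
console.log(pickModel('deepseek-v3.1', 34103, exampleMetadata)); // deepseek-v3.1

// Models without a metadata entry are never rerouted.
console.log(pickModel('some-other-model', 18, exampleMetadata)); // some-other-model
```

Keeping the decision in a pure function like this makes it easy to check the margin arithmetic without running the relay.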

Here are some logs from actual use:

    [15:07:56.279] INFO (37): Total tokens: 34103
        module: "hook: beforeUpstreamRequest | plugin:context-router.ts"
    [15:08:00.398] INFO (37): Total tokens: 33698
        module: "hook: beforeUpstreamRequest | plugin:context-router.ts"
    [15:08:23.567] INFO (37): Total tokens: 33953
        module: "hook: beforeUpstreamRequest | plugin:context-router.ts"
    [15:13:22.400] INFO (37): Total tokens: 18
        module: "hook: beforeUpstreamRequest | plugin:context-router.ts"
    [15:13:22.400] INFO (37): Routed to free model: deepseek-v3.1:free
        module: "hook: beforeUpstreamRequest | plugin:context-router.ts"
    [15:13:43.353] INFO (37): Total tokens: 35
        module: "hook: beforeUpstreamRequest | plugin:context-router.ts"
    [15:13:43.353] INFO (37): Routed to free model: deepseek-v3.1:free